In the previous lab, in February, students created maps based on UTM grids and measured their pace counts over a measured distance. The pace data was added to the map, which was then used for navigation in the field. In this lab, the group took those field maps and a GPS unit into the area of study to find and collect the points assigned to it.

Area of Study:

The area of study is the Priory, a UWEC housing area for returning, nontraditional students. It was originally a priory for monks before it was repurposed as student housing. The residence hall is set in 120 acres of woods crossed by partially maintained trails. Because the area is wild and heavily wooded, it is easy to get lost in and difficult to traverse.

Methods:

The maps created in February were used to navigate the Priory. Before setting out to collect points, it was important to set the GPS unit to record a track of the route hiked along the way. The GPS unit can switch its position display from latitude and longitude to the UTM grid system, and this was used so that its readings matched the grid on the maps. The group also needed a way to prove that it had reached each assigned UTM point. This was done by saving a point in the GPS unit and by taking a picture with location enabled. Finally, the group plotted the assigned points on the map so they were easy to find and navigate between. The result was a rudimentary navigation chart.
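When a GPS unit is switched from latitude and longitude to UTM, the zone it reports depends on longitude. The sketch below shows the standard 6-degree zone rule. The longitude used is only an approximate value for the Eau Claire area and is an assumption, not a reading from the lab, and the function names are made up for illustration. The special zone exceptions around Norway and Svalbard are ignored.

```python
import math


def utm_zone(longitude_deg):
    """Return the standard UTM zone number (1-60) for a longitude in degrees."""
    if not -180.0 <= longitude_deg <= 180.0:
        raise ValueError("longitude must be between -180 and 180 degrees")
    if longitude_deg == 180.0:
        # The +180 meridian is conventionally placed in zone 60.
        return 60
    # Zones are 6 degrees wide and are counted eastward from -180.
    return int(math.floor((longitude_deg + 180.0) / 6.0)) + 1


def central_meridian(zone):
    """Return the central meridian (degrees east) of a UTM zone."""
    return zone * 6 - 183


# Assumed, approximate longitude for the Eau Claire area (not from the lab data).
approx_longitude = -91.5
zone = utm_zone(approx_longitude)
print("UTM zone:", zone)
print("Central meridian:", central_meridian(zone))
```

Knowing the zone matters because eastings and northings from different zones cannot be mixed on one grid map.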

UTM Grid Map used (credit Rachel Hopps)

With the GPS unit set up and the points plotted on the map, it was time to navigate to them. At the start, the group decided to stick to areas that appeared to have a trail nearby, which let us move quickly from trail to point. However, some points were located deep in the woods. To reach these quickly, we moved from point to point in lines that were as straight as possible. This was difficult because heavy vegetation in some areas forced the group to detour in drastically different directions, which can be seen in the tracking data.
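Because UTM coordinates are in meters on a flat grid, a straight-line leg between two points can be worked out with simple trigonometry. The following is a minimal sketch of that calculation, not the procedure the group actually followed. The coordinates and the pace count per 100 meters are hypothetical values chosen for illustration, and the bearing is a grid bearing, so magnetic declination is not accounted for.

```python
import math


def grid_leg(start, end):
    """Return (distance_m, grid_bearing_deg) for a straight leg.

    start and end are (easting, northing) tuples in meters,
    both in the same UTM zone.
    """
    d_east = end[0] - start[0]
    d_north = end[1] - start[1]
    distance = math.hypot(d_east, d_north)
    # atan2(east, north) measures the angle clockwise from grid north.
    bearing = math.degrees(math.atan2(d_east, d_north)) % 360.0
    return distance, bearing


def meters_to_paces(distance_m, paces_per_100m):
    """Convert a distance to a whole number of paces."""
    return round(distance_m * paces_per_100m / 100.0)


# Hypothetical points and pace count, not taken from the lab data.
start = (617000.0, 4957000.0)
target = (617300.0, 4957400.0)
paces_per_100m = 65

distance, bearing = grid_leg(start, target)
print(f"Distance: {distance:.0f} m")
print(f"Grid bearing: {bearing:.1f} degrees")
print(f"Paces: {meters_to_paces(distance, paces_per_100m)}")
```

In thick vegetation a leg like this usually has to be broken into shorter legs around obstacles, with the distance and bearing recomputed from each new position.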

Collecting the first point with Rachel and Joseph

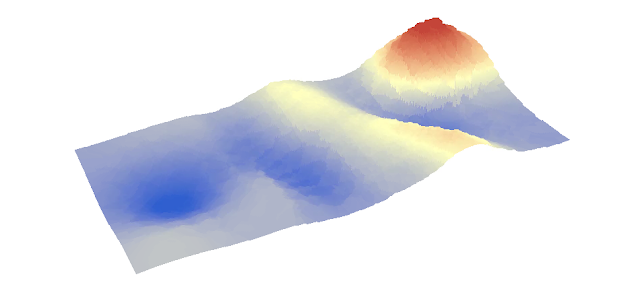

One problem the group noticed was that the compass on the GPS unit was slow to respond to turns. The compass is electronic and took a few moments to adjust to the group's direction of travel. This made travel frustrating, as the group had to readjust every 10 meters or so. Other frustration came from the heavy vegetation and brambles, which made travel difficult and sometimes painful. The map was also missing some data that would have been useful. Because some of the points were on elevated ground, elevation data in the form of a topographic map would have helped and should not have been excluded.

Tracking Path and collected coordinates

Results:

For most of the exercise, the group made short work of the points, with only a couple of instances where a point was difficult to reach. This is because the initial planning let the group plan an approach and gave each person a set job. One person used the GPS unit to record the track and navigated with a compass. Another used the UTM map to feed the needed coordinates to the GPS user and counted paces. The third person broke trail for ease of travel and took pictures and notes. With a three-person team it was possible to follow a roughly oblong loop and collect all the points, which were then imported into ArcGIS.

Conclusion:

Collecting data in the field is a prime example of geography in practice. Some agencies require field methods, and the ability to create an accurate map and to navigate an area of study with a GPS unit and a UTM coordinate map is invaluable in many companies and professions. Map creation and field navigation are useful for surveying, search and rescue, and many other applications.